The Docker slogan ‘Build, Ship, and Run’ advertises easy set up of immutable software builds, but it is not always that easy. Setting up immutable builds with Docker is pretty straight forward and shipping is just a matter of pushing the image to a repository. The next step is building the cloud infrastructure to run the containers. In the talk at the DevOps Days in Riga I have shown how to create an immutable infrastructure on AWS with Terraform. The example belows shows how you can run your micro services in docker containers on AWS.

In this talk I briefly touch upon building immutable software. But the main focus of the talk will be on creating an immutable infrastructure. In this talk I will show you how to create an immutable infrastructure on AWS with Terraform. I will use a real world example to explain and show live how easy you can get micro services live on AWS and continuously apply changes to the same cloud environment..

Slides

Below the slides that I used for the talk, the slides are available as well on GitLab. You can easy navigate through the slides with the spacebar.

To get started with Terraform the best starting points are:

Examples

Hello World

During the talk, I demonstrated a hello world example, see the link above. This examples shows some basics of terraform by creating a ec2 instance and security group to server a simple web application. The example only works in the AWS region eu-west-1 since the AMI used is only available in this region.

ECS demo

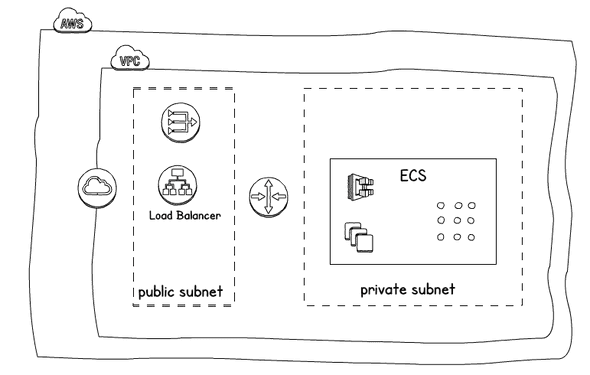

The second example I have shown, is creating an immutable infrastructure to server docker containers. The picture below descibes briefly the enviroment that will be cretaed.

The example also creates log groups in cloudwatch to capture the logging of the ecs agent and the running containers (services).

Setup

Before you can start, you need an AWS account with sufficient rights (admin), and you should create a AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY to be able to access your account programmatically.

Clone the repository containing the ECS sample terraform code. To create the infrastructure step-by-step you can check out the tags vpc and ecs.

git clone https://github.com/npalm/tf-ecs-demo.git

git checkout vpcYou should also have Terraform installed (home brew install terraform) or use a docker container to run the terraform commands, for example:

docker run -it --env-file <AWS_KEYS_FILE> -v $(pwd):/data -w /data \

hashicorp/terraform:0.10.4 <terraform command>Create network layers (VPC)

In the next steps we will create the network layers.

First, we initialize and plan our changes.

terraform init

terraform planTerraform should print on the console that 19 needs to be added and 0 to change or destroyed. Next we apply the change be executing terraform apply.

Add ECS cluster

Now we have the network layer created, we will add the ECS cluster. By default the bastion host is disabled, the bastion can be enabled by updating the variable enable_bastion in the terraform.tfvars file. Time to plan and apply the new resources for ECS.

git checkout ecs

terraform plan

terraform applyOnce terraform is ready it will print the url of two applications on the console. One application, is a simple micro service that just prints the AWS availabilty zone where it is running, the other is a graphql micro service. It will take a few minutes before the services are available.

Adding your own service.

Time to have some more fun. Edit the main.tf and start making changes. Remove all the services, add your own service. By default for each service an application load balancer (ALB) is created. Add for each services that you want to deploy a configuration as below and update the variables as required.

module "your-service" {

source = "ecs-service"

service_name = "<service-name>"

image_url = "<docker-image>"

container_port = <port in the container where the service is listening>

desired_count = <number of instances>

aws_region = "${var.aws_region}"

environment = "${var.environment}"

vpc_id = "${module.vpc.vpc_id}"

subnets = "${module.vpc.public_subnets}"

cluster_id = "${module.ecs-cluster.cluster_id}"

ecs_service_role_name = "${module.ecs-cluster.ecs_service_role_name}"

}Now plan and apply your changes, Terraform will inform you that a few resources will be destroyed (the removed services) and a few will be added.

Clean up

Once done you can easily clean-up all created resources in AWS, just run terraform destroy.