This post explains how to run and debug AWS Lambda functions locally, without mocking and with the same permissions as when running in the cloud.

The problem

Imagine you are building an AWS serverless function. As a good developer you have designed and written your code with practices like clean code and TDD. Your code is clean, maintainable and well (unit) tested. But the moment you deploy your function to the cloud, it does not work as you expected. Would it not be great if you could run, debug and iterate quickly on your own machine, and be 100% certain when deploying that your Lambda will work smoothly in the cloud?

So what is the problem? The real world is not as nicely isolated as your unit test environment. There are no mocks or stubs. The environment could be different. The function is invoked differently. And IAM permissions are only applied once the function runs in the cloud. Of course, this is mostly the difference between a unit test environment and an integration test environment.

Time to find out how close we can get to real-world behavior, that is the cloud, while running as much as possible locally.

Tools

For this post we use the following tools.

- VSCode for code editing; the linked repo contains a list of suggested plugins.

- AWS CLI, Amazon's command line interface.

- AWS SAM CLI, the CLI of the Amazon Serverless Application Model, to run the Lambda locally.

- Terraform, as the IaC tool to create cloud resources.

- Yarn or npm, a package manager for JavaScript.

- Serverless Framework, to test the Lambda locally.

- Docker, required by AWS SAM.

A simple Lambda

Let’s first build a simple Lambda. We use TypeScript as the language for the Lambda, which requires a Node runtime environment for execution. This adds an extra step between writing and running the code: we need to compile the TypeScript code to JavaScript.

We write a simple program that sends a message to an SQS queue (Amazon’s Simple Queue Service). It does not really matter which AWS service we use. We only need an integration point with the cloud, so that local execution also depends on a cloud service. For simplicity we limit ourselves to one.

Our tiny program reads the SQS queue URL and a message from the environment. If no message is set, the famous string Hello World! is sent to the queue.

import { SendMessageCommand, SQSClient } from "@aws-sdk/client-sqs";
import { Context, ScheduledEvent } from "aws-lambda";

const client = new SQSClient({});

export async function handler(
  event: ScheduledEvent,
  context: Context
): Promise<void> {
  try {
    // Read the queue URL and the optional message from the environment.
    const queueUrl = getEnvVariable("QUEUE_URL");
    const message = getEnvVariable("MESSAGE", "Hello World!");

    const command = new SendMessageCommand({
      QueueUrl: queueUrl,
      MessageBody: message,
    });
    const response = await client.send(command);
    console.info(`Message sent with message id ${response.MessageId}`);
  } catch (e) {
    console.error(e);
  }
}
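The handler relies on a getEnvVariable helper that is not shown in this post. A minimal sketch of what such a helper could look like follows. The signature is inferred from the two calls in the handler; throwing when a variable is missing and no default is given, as well as the optional env parameter (added only to make the function easy to test), are assumptions and not necessarily what the linked repo does.

```typescript
// Hypothetical helper: the name and call shape come from the handler,
// the body is an assumption for illustration.
export function getEnvVariable(
  name: string,
  defaultValue?: string,
  env: Record<string, string | undefined> = process.env
): string {
  const value = env[name];
  // Treat unset and empty variables the same way.
  if (value !== undefined && value !== "") {
    return value;
  }
  if (defaultValue !== undefined) {
    return defaultValue;
  }
  throw new Error(`Environment variable ${name} is not set`);
}
```

Failing loudly on a missing QUEUE_URL surfaces a misconfigured local environment before any call to AWS is made.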

Writing a unit test is straightforward: we mock the AWS SDK v3 SQSClient with aws-sdk-client-mock. That part tests the logic.

import { handler } from './lambda';
import { mockClient } from 'aws-sdk-client-mock';
import { SendMessageCommand, SQSClient } from '@aws-sdk/client-sqs';
import { event, context } from './test-data';

const mock = mockClient(SQSClient);

describe('Test lambda.', () => {
  it('Should send message.', async () => {
    mock.on(SendMessageCommand).resolves({
      MessageId: '',
    });

    process.env.QUEUE_URL = 'https://somequeue.url/123';
    process.env.MESSAGE = 'test';

    // The handler returns nothing, so a successful run resolves to undefined.
    await expect(handler(event, context)).resolves.toBeUndefined();
  });
});

While mocking is a great way to check the logic, it does not guarantee that an actual deployment in the cloud will work. To test the Lambda in a realistic scenario, connected to the services it depends on, integration testing is common practice. But integration testing does not always speed up the development flow. Sometimes we need an easy way to quickly check code changes. For this reason we explore running the Lambda locally, so we can easily iterate on changes and attach a debugger.

Required cloud resources

The Lambda function above requires the following AWS resources.

- A Lambda function. We will not create this one, since we focus on running locally.

- An SQS queue, the queue to which we publish messages. Since the goal is to run the Lambda as realistically as possible, without any mocking, we need an actual SQS queue.

- An execution role, the role that defines which resources the Lambda can access. The Lambda needs to send messages to SQS. We are also going to use this role locally.

Today there are dozens of ways to create cloud resources: manually via the web console, AWS CloudFormation, Terraform, AWS CDK, Pulumi, Serverless Framework, etc. Here we use Terraform, an IaC ecosystem that has no extra tooling to streamline serverless development. When developing only a serverless application, SAM, the CDK or the Serverless Framework may seem a more logical choice. But using Terraform shows how we can mix and match if we cannot choose every component.

Time to create the cloud resources with Terraform. This setup assumes you have an admin role. Clone the repo and cd into the Terraform directory to create the resources.

First we create the queue and the role. We add an extra principal to the role's trust policy so that you can switch to the role used by the Lambda.

locals {
  namespace = "blog"
}

resource "aws_sqs_queue" "test" {
  name = "${local.namespace}-test"
}

resource "aws_iam_role" "lambda" {
  name               = "${local.namespace}-test"
  assume_role_policy = data.aws_iam_policy_document.assume.json
}

data "aws_caller_identity" "current" {}

data "aws_iam_policy_document" "assume" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["lambda.amazonaws.com"]
    }

    # Assume you have admin rights, this allows you to switch locally to this role.
    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"]
    }
  }
}

Next, we create a policy that grants the Lambda the required access to AWS resources, in this example the SQS queue.

resource "aws_iam_role_policy" "sqs" {
  name   = "runner-ssm-session"
  role   = aws_iam_role.lambda.name
  policy = data.aws_iam_policy_document.sqs.json
}

data "aws_iam_policy_document" "sqs" {
  statement {
    actions = [
      "sqs:SendMessage",
      "sqs:GetQueueAttributes"
    ]
    resources = [
      aws_sqs_queue.test.arn,
    ]
  }
}

Finally we print the outputs required later.

output "test" {
  value = {
    queue_url   = aws_sqs_queue.test.url
    lambda_role = aws_iam_role.lambda.arn
  }
}

Ensure you are logged in to AWS and have the correct profile active, then run:

terraform init

terraform apply

Your output should look like this:

Apply complete! Resources: 3 added, 0 changed, 0 destroyed.

Outputs:

test = {
  "lambda_role" = "arn:aws:iam::<ACCOUNT_ID>:role/test/blog-test"
  "queue_url" = "https://sqs.eu-west-1.amazonaws.com/<ACCOUNT_ID>/blog-test"
}

The last step of the cloud setup is to configure your AWS profile in <user_home>/.aws/config. This profile makes it easy to assume (switch to) the role we created to execute the Lambda.

[profile admin-role]

role_arn=<....>

region = eu-west-1

[profile blog-test]

source_profile=admin-role

region=eu-west-1

role_arn=arn:aws:iam::<ACCOUNT_ID>:role/test/blog-test

As an alternative to setting up a profile, you can invoke the STS service to obtain credentials for the Lambda execution role.

# role_arn must hold the ARN of the Lambda execution role (see the Terraform output)
temp_role=$(aws sts assume-role --role-arn "$role_arn" \
  --duration-seconds 3600 --role-session-name "test")

export AWS_ACCESS_KEY_ID=$(echo "$temp_role" | jq -r .Credentials.AccessKeyId)
export AWS_SECRET_ACCESS_KEY=$(echo "$temp_role" | jq -r .Credentials.SecretAccessKey)
export AWS_SESSION_TOKEN=$(echo "$temp_role" | jq -r .Credentials.SessionToken)

By now you should have created an SQS queue and a role for the Lambda in your AWS account. No Lambda is deployed at all. You can test your setup with the AWS CLI.

# send with the Lambda role
aws sqs send-message --queue-url <QUEUE_URL> --message-body "Test" --profile blog-test

# receive with the Lambda role, this causes an error because the role may only send
aws sqs receive-message --queue-url <QUEUE_URL> --profile blog-test

# receive with your admin role
aws sqs receive-message --queue-url <QUEUE_URL> --profile admin-role

# purge any messages left on the queue
aws sqs purge-queue --queue-url <QUEUE_URL> --profile admin-role

Enough about creating infra and testing it. Remember, we just showed one way to create the cloud resources with IaC. Feel free to use your own preferred (or required) framework.

Testing locally

For running the Lambda locally, we explore several options. Let’s first list them briefly. The list will not be complete but should include the most logical options.

- Using Node.js: Run the Lambda locally in Node.js, for example with a watcher like nodemon. The invocation has to be faked by calling the handler function directly.

- Using SAM: Run the Lambda function using the AWS Serverless Application Model (SAM). The function runs via SAM and we can just pass an event to the function, similar to running in the cloud.

- Using Serverless Framework: Like AWS SAM, the Serverless Framework simplifies the process of building serverless applications, including Lambda functions. The framework also provides an option to run the function locally.

Node.js

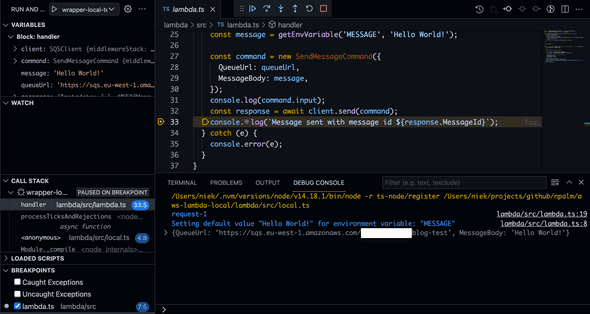

We try to stay as close as possible to the Node.js ecosystem. To run the Lambda locally we start a small wrapper program via a nodemon watcher, which invokes the Lambda on every code change. Alternatively, just start the program by hand after each change, without a watcher.

Generate the sample event with SAM. Note that the sample event does not match the TypeScript definition of the event: the version property is missing.

sam local generate-event cloudwatch scheduled-event

Next we write a small wrapper; check the source code for the full details.

import { Context, ScheduledEvent } from "aws-lambda";
import { handler } from "./lambda";

const event: ScheduledEvent = {
  ...
};

const context: Context = {
  ...
};

// Call the handler directly, this replaces the invocation by the Lambda service.
handler(event, context)
  .then()
  .catch((e) => {
    console.error(e);
  });

We can start this wrapper directly in VSCode via a launch configuration; check the config in the linked repo. This makes debugging your code trivial.
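As noted earlier, the event generated by SAM lacks the version property that the TypeScript ScheduledEvent type expects. One way to deal with this, sketched here, is to merge the generated JSON with a default version. The ScheduledEventLike interface is a local stand-in so the snippet is self-contained (in the real wrapper you would use ScheduledEvent from aws-lambda), withVersion is a hypothetical helper, and the field values and the "0" default are illustrative assumptions.

```typescript
// Local stand-in for the ScheduledEvent type from "aws-lambda" (assumption).
interface ScheduledEventLike {
  version: string;
  id: string;
  "detail-type": string;
  source: string;
  account: string;
  time: string;
  region: string;
  resources: string[];
  detail: unknown;
}

// Shape of the generated sample: same fields, but version may be absent.
type GeneratedEvent = Omit<ScheduledEventLike, "version"> & { version?: string };

// Adds a default version when the generated event does not carry one.
export function withVersion(
  generatedEvent: GeneratedEvent,
  version = "0"
): ScheduledEventLike {
  return { ...generatedEvent, version: generatedEvent.version ?? version };
}

// Placeholder values, not real account data.
const generated: GeneratedEvent = {
  id: "00000000-0000-0000-0000-000000000000",
  "detail-type": "Scheduled Event",
  source: "aws.events",
  account: "123456789012",
  time: "1970-01-01T00:00:00Z",
  region: "eu-west-1",
  resources: ["arn:aws:events:eu-west-1:123456789012:rule/ExampleRule"],
  detail: {},
};

export const patchedEvent: ScheduledEventLike = withVersion(generated);
```

Patching the event in code keeps the generated JSON file untouched, so it can be regenerated with SAM at any time.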

Another option is to use nodemon in watch mode and re-run after every change: run yarn watch:local. Ensure you have set the correct AWS profile in the terminal before you run the yarn command.
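Since the wrapper uses whatever credentials happen to be active in the terminal, a small guard can make a forgotten profile fail fast. The sketch below is an assumption for illustration: assertAwsProfile is a hypothetical helper that is not part of the linked repo, and it only applies when you select credentials through AWS_PROFILE rather than exporting STS credentials.

```typescript
// Hypothetical guard: throws unless the expected AWS profile is active.
// The env parameter defaults to process.env and exists to ease testing.
export function assertAwsProfile(
  expected: string,
  env: Record<string, string | undefined> = process.env
): void {
  const active = env["AWS_PROFILE"];
  if (active !== expected) {
    throw new Error(
      `Expected AWS_PROFILE=${expected} but found ${active ?? "<unset>"}`
    );
  }
}

// Possible usage at the top of the wrapper, before calling the handler:
// assertAwsProfile("blog-test");
```

With such a check in place, a run with the wrong profile stops with a clear message instead of an access error from SQS.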

Serverless framework

The Serverless Framework lets you develop and deploy serverless stacks to various clouds. Here we only briefly explore whether we can use the framework to invoke the function locally.

The framework requires you to define your function in a serverless.yml. Below we define the test function in a serverless manifest.

provider:

name: aws

runtime: nodejs14.x

LambdaHashingVersion: 20201221

functions:

BlogTest:

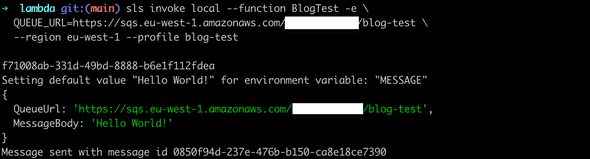

handler: dist/index.handlerBefore we invoke the function ensure you have not set the environment variable AWS_PROFILE. And run yarn run build to generate the JavaScript dist bundle.

sls invoke local --function BlogTest -e QUEUE_URL=<QUEUE_URL> \
  --region <REGION> --profile blog-test

We leave it here and do not cover VSCode integration or attaching a debugger.

AWS SAM

The last option we explore is AWS SAM, the framework provided by AWS for building serverless applications. Amazon provides a VSCode plugin, the AWS Toolkit, to easily integrate SAM into VSCode.

For SAM, the application needs to be defined in a manifest file named template.yml. Below is a minimal version of the SAM manifest required to run our Lambda function.

Resources:

BlogTest:

Type: AWS::Serverless::Function

Properties:

Runtime: nodejs14.x

Architectures:

- x86_64

Handler: dist/index.handler

MemorySize: 128

Timeout: 200

Environment:

Variables:

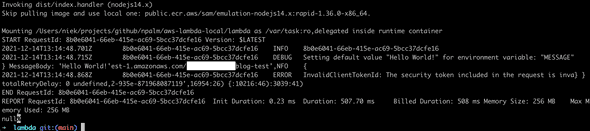

QUEUE_URL:We can simply invoke the Lambda via a CLI command. Before invoking SAM, ensure you have build the sources, yarn run build. And set the profile for AWS, export AWS_PROFILE=blog-test.

sam local invoke

The log output should look very similar to what you know from CloudWatch.

Another option is to start a local Lambda endpoint with SAM and invoke the function with the AWS CLI.

sam local start-lambda

Open a second terminal and use the AWS CLI to invoke the Lambda. Since our Lambda does not require any input, we do not provide an event.

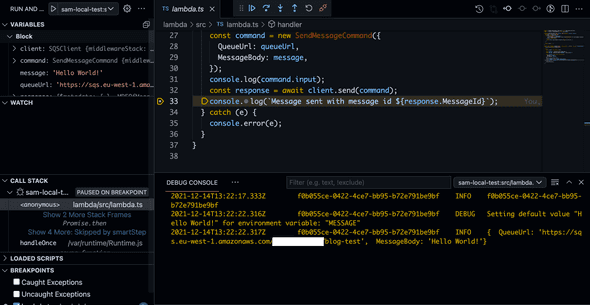

aws lambda invoke --function-name "BlogTest" \
  --endpoint-url "http://127.0.0.1:3001" --no-verify-ssl out.txt

This all works, but it requires building the sources first. And attaching a debugger would most likely require several Google searches to find the right way to configure your IDE. There is an easier option, where you do not have to take care of compiling the code and can easily attach a debugger in VSCode. The AWS Toolkit helps you create a launch configuration; see the detailed instructions in the AWS documentation. We added the following launch configuration. The sample was generated with the AWS Toolkit; make sure you update the reference to the lambdaHandler and set the credentials and environment variables.

{
  "configurations": [
    {
      "type": "aws-sam",
      "request": "direct-invoke",
      "name": "lambda-local-test:src/lambda.handler (nodejs14.x)",
      "invokeTarget": {
        "target": "code",
        "projectRoot": "${workspaceFolder}/",
        "lambdaHandler": "src/lambda.handler"
      },
      "lambda": {
        "runtime": "nodejs14.x",
        "payload": {},
        "environmentVariables": {
          "QUEUE_URL": "<QUEUE_URL>"
        }
      },
      "aws": {
        "credentials": "profile:blog-test"
      }
    }
  ]
}

Now we can start a debugging session via VSCode, almost the same as the Node.js option but without a wrapper. The drawback is that the boot time is a bit slower: SAM builds the sources and starts a Docker container before the function is invoked.

Conclusion

We have explored several options for running and debugging an AWS Lambda in a local developer environment. I can recommend AWS Toolkit for VSCode with SAM. It lets you easily test and debug the Lambda locally. If you really want to bring speed to your development, you could consider a tiny wrapper and avoid the heavy lifting of the AWS Toolkit. In case you are all in with AWS SAM or the Serverless Framework I would stick to that framework.