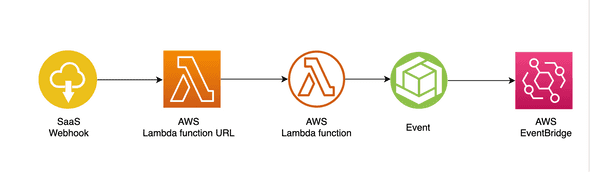

In this post, we explore in a proof of concept (POC) how to deliver GitHub events to AWS EventBridge and route them to different targets.

The context

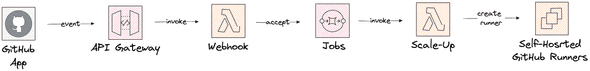

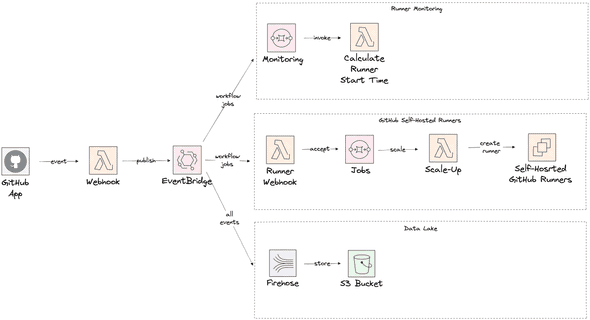

With the growing usage of GitHub and the scaling of more self-hosted runners, we found a strong need to act on more of the events sent by GitHub. For the self-hosted runners, we already receive GitHub events via a webhook. The events are processed by the control plane for scaling the runners. As you can see in the image below, events are passed directly from a Lambda to the SQS queue, which makes it hard to use the same event for another independent task.

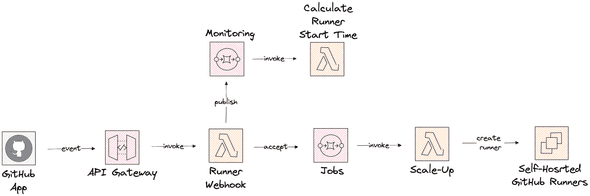

With this runner solution, we already needed to process the workflow job events for monitoring purposes, for example, to calculate how long it takes for a job to start after the first event is created (i.e. the job was queued). We introduced a secondary queue to deliver events for analytics. A simple hack, but it immediately did not feel right.

The webhook provided by the runner solution is tailored to accept only workflow_job events, but we are interested in many more. Examples are triggering a compliance process when a repository is created, triggering an alarm when someone, even an admin, makes a repository public, and gathering analytics on the lead time of a pull request. Even if we do not combine these use cases into one solution, the current setup is not flexible enough to process all kinds of events with different needs.

What’s the experiment

Since GitHub and AWS Cloud are a given, we are looking for a better way to handle the events while avoiding the creation of a new webhook or app for every event we are interested in. We are looking for an event-driven solution to process GitHub events. We distinguish two main use cases: a hot stream of events to act on directly, and a cold stream for analytics. AWS typically provides the building blocks, allowing you to compose the solution in several ways. Let’s quickly explore our options:

- Simple Notification Service (SNS): A lightweight serverless option. A producer (webhook) publishes messages on a topic, and subscribers can receive notifications via an HTTP/HTTPS endpoint, email, Kinesis, SQS, Lambda, and SMS. With a filter, a subscriber can receive a subset of the messages.

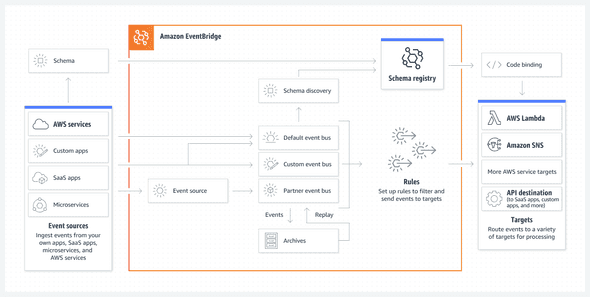

- EventBridge: A lightweight serverless option. A producer (webhook) publishes messages on the bus. With rules, messages can be delivered to targets. Example targets are Lambda, API endpoints, SQS, SNS, Redshift, and many more. EventBridge also provides an option to redeliver events based on a message archive.

- Kinesis: A serverless option for handling continuous streams of data in real time. Kinesis is based on or inspired by Kafka, AWS native, and opinionated.

- Managed Kafka (MSK): A server-based option to handle continuous data streams. Kafka is highly configurable and supports most use cases, but it is also more expensive and requires more knowledge to manage.

We are looking for a serverless approach since our load is generally neither constant nor evenly distributed. We also have a strong preference for a managed service over having to manage our own services. With these requirements, AWS EventBridge seems a logical candidate to investigate.

AWS EventBridge allows you to publish messages from several sources. Based on rules, messages can be transformed and routed to several targets such as SQS, SNS, Lambda, Redshift, a Firehose delivery stream, an API endpoint, and many more.

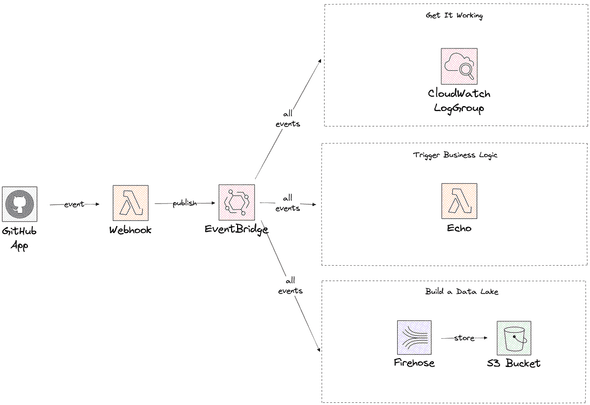

In this POC we quickly explore how hard it is to set up an integration between GitHub and AWS EventBridge to capture events and deliver them to different targets. For the experiment, we limit ourselves to the following targets.

- CloudWatch LogGroup: Can we get the integration working?

- Lambda: Can we trigger custom business logic based on events?

- Firehose Data Stream: Can we build a Data lake in S3?

As the final step, we will re-play the events sent earlier.

⚠️ Code and Terraform examples are not optimized or hardened ⚠️

Let’s do it!

Deliver GitHub events to CloudWatch LogGroup

We use Terraform as our IaC framework. The first resource we need is the event bus. To be able to replay messages later, we create an event archive as well.

locals {

aws_region = "eu-west-1"

prefix = "blog"

}

resource "aws_cloudwatch_event_bus" "messenger" {

name = "${local.prefix}-messages"

}

resource "aws_cloudwatch_event_archive" "messenger" {

name = "${local.prefix}-events-archive"

event_source_arn = aws_cloudwatch_event_bus.messenger.arn

}

The next question is: how can we deliver events from GitHub to the EventBridge? In GitHub, you can define an App with a webhook and subscribe to events. Events are delivered to the webhook, signed with a secret. Be aware that events are only sent for repositories in which the app is installed. An easier alternative is to create a webhook on the enterprise, organization, or repository level.

Now that we know how to send events, we need a way to receive them in AWS and put them on the EventBridge. AWS recently announced a quick start to deliver GitHub events to the EventBridge.

The AWS quick start creates a Lambda function with an endpoint that you can configure in GitHub as a webhook. The Lambda checks the signature before delivering the messages to the event bus. Amazon provides a CloudFormation template that deploys the webhook to receive GitHub events and publish them on the bus. Setting this up through the web console is straightforward. However, it is not clear how the Lambda function code is maintained. There also seems to be no way to lock the version of the code; the only way to do so is to maintain the code and CloudFormation template yourself. The provided Lambda does not offer any debug logging or configuration options, short of changing the source code. In addition, GitHub does not guarantee a maximum message size, while AWS EventBridge only allows messages smaller than 256KB. If you want to handle large messages differently, the Lambda provides no option. In those cases, Amazon suggests downloading the Lambda from the console and maintaining it yourself. You can also fetch the Lambda zip from S3.

aws s3 cp s3://eventbridge-inbound-webhook-templates-prod-eu-west-1/lambda-templates/github-lambdasrc.zip .

Since we already built a webhook to capture the GitHub events, stripping this function down to deliver the messages to the event bus is fairly simple. For now, we build our own function to keep more control, which gives us the option to take action on messages we cannot accept or to add more logging. Later we can still decide to move to the AWS route. A webhook to handle the event in TypeScript looks as shown below; check the full sources on GitHub.

export async function handle(headers: IncomingHttpHeaders, body: string): Promise<Response> {

const { eventBusName, eventSource } = readEnvironmentVariables();

const githubEvent = headers['x-github-event'] as string || 'github-event-lambda';

let response: Response = {

statusCode: await verifySignature(githubEvent, headers, body),

};

if (response.statusCode !== 200) return response;

// TODO handle messages larger than 256KB

const client = new EventBridgeClient({ region: process.env.AWS_REGION });

const command = new PutEventsCommand({

Entries: [{

EventBusName: eventBusName,

Source: eventSource,

DetailType: githubEvent,

Detail: body,

}]

});

try {

await client.send(command);

} catch (e) {

logger.error(`Failed to send event to EventBridge`, e);

response.statusCode = 500;

}

return response;

}

Next, we deploy the function with a function URL. Keep in mind that this endpoint is open to the world; the signature check validates each message. Creating a Lambda with a function URL requires the Terraform resources described below. See here for the full example.

resource "aws_lambda_function_url" "webhook" {

function_name = aws_lambda_function.webhook.function_name

#qualifier = "${var.prefix}-github-webhook"

authorization_type = "NONE"

}

resource "aws_lambda_function" "webhook" {

filename = local.lambda_zip

source_code_hash = filebase64sha256(local.lambda_zip)

function_name = "${var.prefix}-github-webhook"

role = aws_iam_role.webhook_lambda.arn

handler = "index.githubWebhook"

runtime = "nodejs18.x"

environment {

variables = {

EVENT_BUS_NAME = var.event_bus.name

EVENT_SOURCE = "github.com"

PARAMETER_GITHUB_APP_WEBHOOK_SECRET = "SOME_BETTER_SECRET"

}

}

}

# roles and policies omitted
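
The handler shown earlier delegates the signature check to a `verifySignature` helper that is not listed in this post. As a minimal sketch of what such a check could look like, the example below assumes GitHub's `X-Hub-Signature-256` header, which carries an HMAC-SHA256 hex digest of the raw body prefixed with `sha256=`. The function name `isValidSignature` and the sample secret are hypothetical and not taken from the repository.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Hypothetical helper, not the verifySignature function from the repository.
// Returns true when the header matches the HMAC-SHA256 of the raw request body.
function isValidSignature(secret: string, body: string, signatureHeader: string | undefined): boolean {
  if (!signatureHeader) return false;
  const expected = "sha256=" + createHmac("sha256", secret).update(body, "utf8").digest("hex");
  const received = Buffer.from(signatureHeader, "utf8");
  const wanted = Buffer.from(expected, "utf8");
  // timingSafeEqual throws on buffers of different length, so compare lengths first.
  return received.length === wanted.length && timingSafeEqual(received, wanted);
}

// Example: sign a payload locally and verify it.
const secret = "example-secret"; // assumption: in practice this comes from a secret store
const payload = JSON.stringify({ action: "opened" });
const header = "sha256=" + createHmac("sha256", secret).update(payload, "utf8").digest("hex");

console.log(isValidSignature(secret, payload, header)); // matching signature
console.log(isValidSignature(secret, payload + " ", header)); // tampered body
```

The comparison uses `timingSafeEqual` rather than `===` so that the time taken does not leak how many characters of the signature were correct.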

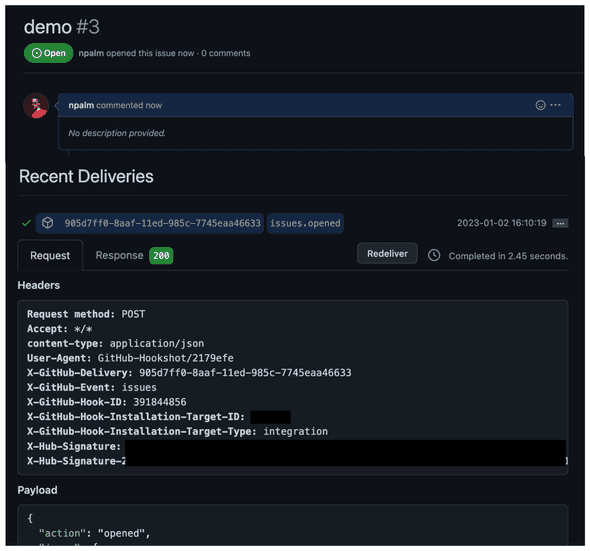

After creating the Terraform resources we can test the webhook. A simple way to test events is by creating a test issue and ensuring your App or webhook is subscribed to events on issues. For the App, you can check the status of events in the advanced section of the App settings. You can redeliver the event here as well, quite handy for testing!

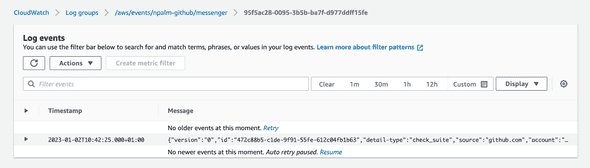

After creating an issue, we should see in AWS that our Lambda is triggered. On the EventBridge itself, we cannot see the event. The next step is to deliver the event to a target. The first target we define, just for testing purposes, is a CloudWatch Log Group.

Before events can be delivered to a target, an event rule needs to be created. For now, the rule matches all events from GitHub.

resource "aws_cloudwatch_event_rule" "all" {

name = "${local.prefix}-github-events-all"

description = "Capture all GitHub events"

event_bus_name = aws_cloudwatch_event_bus.messenger.name

event_pattern = <<EOF

{

"source": [{

"prefix": "github"

}]

}

EOF

}
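
To make the effect of this pattern concrete, here is a simplified model of the prefix match on `source`. It only illustrates the matching idea and is not how EventBridge is implemented; the type and function names are made up for this example.

```typescript
// Simplified model of an event pattern that only filters on "source".
type SourceMatcher = string | { prefix: string };

interface SourcePattern {
  source: SourceMatcher[];
}

// An event matches when at least one matcher in the "source" array matches.
function matchesSource(pattern: SourcePattern, eventSource: string): boolean {
  return pattern.source.some((matcher) =>
    typeof matcher === "string" ? matcher === eventSource : eventSource.startsWith(matcher.prefix),
  );
}

const allGitHubEvents: SourcePattern = { source: [{ prefix: "github" }] };

console.log(matchesSource(allGitHubEvents, "github.com")); // true
console.log(matchesSource(allGitHubEvents, "aws.ec2")); // false
```

Since the webhook publishes events with the source `github.com`, the prefix `github` matches every event it puts on the bus.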

A target can be connected to an event rule. Check the AWS developer documentation for the options. We limit ourselves to a few targets. First, we create the target log group.

resource "aws_cloudwatch_log_group" "all" {

name = "/aws/events/${local.prefix}/messenger"

retention_in_days = 7

}

We use a small Terraform module to define the rule target.

module "event_rule_target_log_group" {

source = "./event_rule_target_log_group"

target = {

arn = aws_cloudwatch_log_group.all.arn

name = "/aws/events/${local.prefix}/messenger"

}

event_bus_name = aws_cloudwatch_event_bus.messenger.name

event_rule = {

arn = aws_cloudwatch_event_rule.all.arn

name = aws_cloudwatch_event_rule.all.name

}

}

The module we declared creates an event rule target for a log group and ensures the right IAM permissions are set. We apply a similar pattern later for Lambda and Firehose as targets of an event rule.

resource "aws_cloudwatch_event_target" "main" {

rule = var.event_rule.name

arn = var.target.arn

event_bus_name = var.event_bus_name

}

data "aws_iam_policy_document" "main" {

statement {

actions = [

"logs:CreateLogStream",

"logs:PutLogEvents",

]

resources = [

"${var.target.arn}:*"

]

principals {

identifiers = ["events.amazonaws.com", "delivery.logs.amazonaws.com"]

type = "Service"

}

condition {

test = "ArnEquals"

values = [var.event_rule.arn]

variable = "aws:SourceArn"

}

}

}

resource "aws_cloudwatch_log_resource_policy" "main" {

policy_document = data.aws_iam_policy_document.main.json

policy_name = replace(var.target.name, "/", "-")

}

Time to trigger another event. As a result, a log message should appear in CloudWatch.

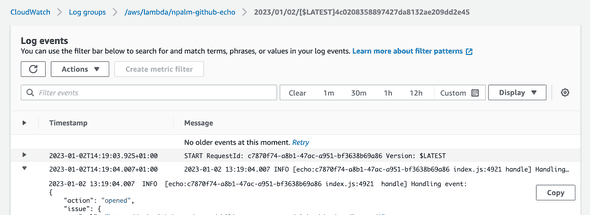

Trigger a Lambda

Let’s check if we can do some more interesting things, like sending a message to a Lambda. For that, we need a simple function. We keep it very simple and only echo the message, but you can imagine replacing this with any business logic required.

export async function handle(event: Schema): Promise<void> {

logger.info("Handling event: " + JSON.stringify(event));

}

The details of the echo Lambda function are implemented in a module, similar to the webhook discussed earlier. This means we only have to add the modules to our main.tf. The echo module implements the Lambda function and declares the required Terraform resources. The second module, event_rule_target_lambda, is similar to the one we created for the log group before, but now tailored to a Lambda target.

module "echo" {

source = "./echo"

prefix = local.prefix

}

module "event_rule_target_lambda" {

source = "./event_rule_target_lambda"

target = {

arn = module.echo.lambda.arn

name = module.echo.lambda.function_name

}

event_bus_name = aws_cloudwatch_event_bus.messenger.name

event_rule = {

arn = aws_cloudwatch_event_rule.all.arn

name = aws_cloudwatch_event_rule.all.name

}

}

When you trigger a new event or update the issue created before, the Lambda is invoked and the event is printed in the Lambda’s log. Not very useful, but remember we only check the pattern here.
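
As a sketch of what real business logic could look like, the function below flags events where a repository is made public, one of the use cases mentioned earlier. It assumes the webhook publishes the GitHub event name as `detail-type` and the payload as `detail`, as the handler in this post does. The `GitHubBusEvent` type and the `isRepositoryPublicized` name are hypothetical, and only the payload fields used here are typed.

```typescript
// Minimal shape of an event as delivered by EventBridge to a Lambda target.
interface GitHubBusEvent {
  source: string;
  "detail-type": string; // GitHub event name, e.g. "repository" or "issues"
  detail: {
    action?: string;
    repository?: { full_name: string };
  };
}

// True when the event describes a repository that was switched to public.
function isRepositoryPublicized(event: GitHubBusEvent): boolean {
  return event["detail-type"] === "repository" && event.detail.action === "publicized";
}

async function handle(event: GitHubBusEvent): Promise<void> {
  if (isRepositoryPublicized(event)) {
    // Placeholder for real alerting, e.g. publishing to a notification channel.
    console.warn(`Repository made public: ${event.detail.repository?.full_name ?? "unknown"}`);
  }
}
```

In practice it would be cheaper to narrow the event rule itself to these events, so the Lambda is only invoked when there is something to act on.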

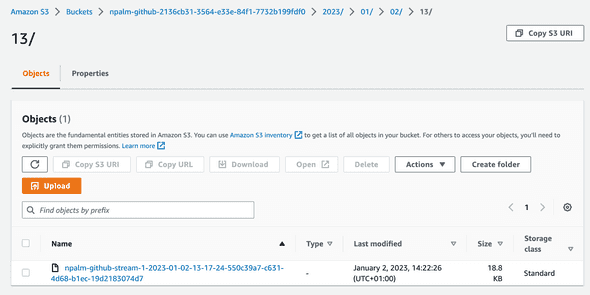

Build a Data Lake in S3

The last target we check is a Firehose delivery stream. With the Firehose stream, we deliver the messages to S3 to mimic a data lake. The following Terraform resources create a Firehose delivery stream to S3 with a standard configuration. This means that data is buffered until 5MB is received or 300 seconds have passed.

resource "random_uuid" "firehose_stream" {}

resource "aws_s3_bucket" "firehose_stream" {

bucket = "${local.prefix}-${random_uuid.firehose_stream.result}"

force_destroy = true

}

resource "aws_s3_bucket_acl" "firehose_stream" {

bucket = aws_s3_bucket.firehose_stream.id

acl = "private"

}

data "aws_iam_policy_document" "firehose_assume_role_policy" {

statement {

actions = ["sts:AssumeRole"]

principals {

type = "Service"

identifiers = ["firehose.amazonaws.com"]

}

}

}

resource "aws_iam_role" "firehose_role" {

name = "${local.prefix}-firehose-role"

assume_role_policy = data.aws_iam_policy_document.firehose_assume_role_policy.json

}

resource "aws_iam_role_policy" "firehose_s3" {

name = "${local.prefix}-s3"

role = aws_iam_role.firehose_role.name

policy = templatefile("${path.module}/policies/firehose-s3.json", {

s3_bucket_arn = aws_s3_bucket.firehose_stream.arn

})

}

resource "aws_kinesis_firehose_delivery_stream" "extended_s3_stream" {

name = "${local.prefix}-stream"

destination = "extended_s3"

extended_s3_configuration {

role_arn = aws_iam_role.firehose_role.arn

bucket_arn = aws_s3_bucket.firehose_stream.arn

}

}

And like before, we have created a module to connect the Firehose as the target to the event rule.

module "event_rule_target_firehose_s3_stream" {

source = "./event_rule_target_firehose_s3_stream"

target = {

arn = aws_kinesis_firehose_delivery_stream.extended_s3_stream.arn

name = aws_kinesis_firehose_delivery_stream.extended_s3_stream.name

}

event_bus_name = aws_cloudwatch_event_bus.messenger.name

event_rule = {

arn = aws_cloudwatch_event_rule.all.arn

name = aws_cloudwatch_event_rule.all.name

}

}

To test our data lake, we have to trigger some events again. After about five minutes you should see the first objects appearing in the S3 bucket.

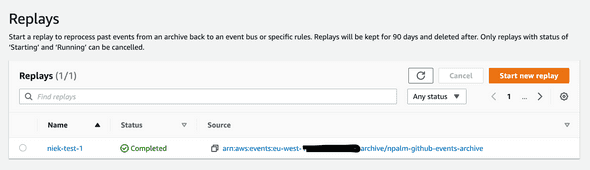

Replay

The final question we would like to answer is: can we replay messages? We have already created a message archive. A replay can be initiated via the web console or the CLI. In the web console, navigate to the event bus and select Replay. Next, set the time window and the source for which you want to replay messages. After starting the job, you should see messages appearing again on the targets.
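
For a scripted replay, the sketch below builds the request parameters for the EventBridge `StartReplay` API. The ARNs, the account id, and the replay name are placeholders. With the AWS SDK for JavaScript v3 the resulting object could be passed to `StartReplayCommand` from `@aws-sdk/client-eventbridge`, which is left out here to keep the example free of dependencies.

```typescript
interface StartReplayInput {
  ReplayName: string;
  EventSourceArn: string; // ARN of the archive to replay from
  EventStartTime: Date;
  EventEndTime: Date;
  Destination: { Arn: string }; // ARN of the event bus that receives the replayed events
}

function buildReplayInput(archiveArn: string, busArn: string, from: Date, to: Date): StartReplayInput {
  if (from.getTime() >= to.getTime()) {
    throw new Error("EventStartTime must be before EventEndTime");
  }
  return {
    ReplayName: `replay-${from.getTime()}`,
    EventSourceArn: archiveArn,
    EventStartTime: from,
    EventEndTime: to,
    Destination: { Arn: busArn },
  };
}

// Placeholder ARNs; replace the account id and names with your own.
const input = buildReplayInput(
  "arn:aws:events:eu-west-1:123456789012:archive/blog-events-archive",
  "arn:aws:events:eu-west-1:123456789012:event-bus/blog-messages",
  new Date("2023-01-01T00:00:00Z"),
  new Date("2023-01-01T01:00:00Z"),
);
console.log(JSON.stringify(input, null, 2));
```

Events are replayed to the event bus the archive was created for, which is why the destination points at the same bus as in the Terraform example.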

Conclusion

Delivering messages from GitHub to AWS is straightforward with AWS EventBridge. As mentioned, we have some doubts about using the Amazon integration with GitHub today, since it is not clear how the code is managed. And when using Terraform rather than CloudFormation, you have to extract the webhook code from the CloudFormation template or Lambda. On the other hand, none of this is holding us back. You should make your own judgment here. At this moment we will most likely build and maintain our own Lambda to ensure we can deal properly with messages that exceed the maximum size of 256KB supported by the EventBridge.

Messages delivered to the EventBridge are forwarded to targets based on rules. In a rule, you specify matching criteria. This can be coarse-grained like we did. We matched the event only based on the source. When there is no EventRule that matches a message, the message ends up in /dev/null and you will get no notification that you missed something. This is not a problem, but should be considered and is more or less the opposite of SNS where you get all the messages unless you filter.

Looking at our current hacky approach, in which we delegate events for monitoring purposes to a second SQS queue, a move to AWS EventBridge seems much more flexible and avoids tailoring the code. With a move to AWS EventBridge, our solution could be transformed as follows.

EventBridge is priced at roughly $1 per 1 million messages published, calculated in blocks of 64KB. Today we handle roughly 1 million messages a month for the workflow_job event alone. When we start listening for many more events, this will likely double a few times. Besides that, you have to think about the cost of running the Lambda that receives the events. And finally, you will incur costs for processing the events.
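
This pricing model can be turned into a rough estimate. The sketch below assumes the numbers from this post (about $1 per million published events, billed in 64KB blocks); check the current AWS pricing before relying on it. The function and constant names are made up for illustration.

```typescript
const BLOCK_SIZE_BYTES = 64 * 1024;
const PRICE_PER_MILLION_USD = 1; // assumption taken from the text, not from a price list

// Each event is billed as one unit per started 64KB block of payload.
function estimateMonthlyCostUsd(eventsPerMonth: number, averageSizeBytes: number): number {
  const blocksPerEvent = Math.max(1, Math.ceil(averageSizeBytes / BLOCK_SIZE_BYTES));
  return (eventsPerMonth * blocksPerEvent * PRICE_PER_MILLION_USD) / 1_000_000;
}

console.log(estimateMonthlyCostUsd(1_000_000, 10 * 1024)); // 1
console.log(estimateMonthlyCostUsd(4_000_000, 100 * 1024)); // 8
```

The second call shows the effect of the block size: an average payload of 100KB spans two 64KB blocks, so every event counts twice.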

Rate limits and quotas should also be considered. The EventBridge quotas for PutEvents vary per region from 10,000 per second down to 400 per second. Messages posted on the EventBridge should not exceed 256KB, similar to SQS and SNS. Many GitHub events seem to be relatively small, but the push event, for example, can be big when many branches and tags are pushed at once. When you start writing Lambdas to handle events that require GitHub API calls, you should also think about the GitHub API rate limits. Using a personal access token (a bad idea) you have a limit of 5,000 API calls per hour. For a GitHub App this can be up to 15,000 per hour.

AWS EventBridge seems to be a good option to start building a solution to handle GitHub events, both for building a data lake and for acting directly on events. If you keep your architecture nicely loosely coupled, you can always move to the Ferrari of eventing, Kafka.
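
Because oversized events are rejected, it helps to check the size before calling `PutEvents`. The sketch below approximates the entry size as the UTF-8 byte length of the source, detail type, and detail. The 256KB limit is the one mentioned in this post, the approximation ignores optional fields such as resources, and the function names are hypothetical.

```typescript
const MAX_ENTRY_BYTES = 256 * 1024; // limit as stated in this post

interface EventEntry {
  Source: string;
  DetailType: string;
  Detail: string; // JSON string, as passed to PutEvents
}

// Approximate size of an entry: the UTF-8 byte length of its string fields.
function entrySizeInBytes(entry: EventEntry): number {
  return [entry.Source, entry.DetailType, entry.Detail]
    .map((value) => Buffer.byteLength(value, "utf8"))
    .reduce((total, size) => total + size, 0);
}

function fitsOnEventBus(entry: EventEntry): boolean {
  return entrySizeInBytes(entry) < MAX_ENTRY_BYTES;
}

const small: EventEntry = {
  Source: "github.com",
  DetailType: "issues",
  Detail: JSON.stringify({ action: "opened" }),
};
const large: EventEntry = { ...small, Detail: JSON.stringify({ blob: "x".repeat(300 * 1024) }) };

console.log(fitsOnEventBus(small)); // true
console.log(fitsOnEventBus(large)); // false
```

A webhook could use such a check to log the rejected event, store the payload elsewhere, or return a distinct status code instead of failing inside the `PutEvents` call.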